AI Acceleration

3D Art & Animation | Visual Branding | Illustration | UX Research

During my five years leading the design team at Discovery Education, I constantly sought more cost-effective and efficient ways to animate the characters in the products I led. Follow the evolution of the technologies we adopted and the efficiencies they gained for the company, all in order to add FUN to our products!

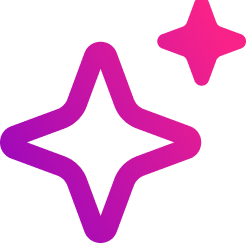

Our small creative team faced challenges in producing high-quality animations and still art efficiently. While our character designs were beloved and prolific throughout our products, the manual effort required to generate still images and animate them slowed down our workflow and limited our ability to scale.

By identifying and implementing the right AI software to train models of our proprietary characters, we could automate and accelerate the creation of still images and animations. This would allow us to maintain creative quality while increasing output speed, helping us overcome resource limitations and expand our visual storytelling.

We had so many requests coming in for still art and animation that there was no way we could fulfill all of them.

Characters had become a cornerstone of our EdTech product experience, serving not only as visual assets but as powerful engagement tools that enhance learning outcomes. These characters help humanize digital content, foster emotional connections with learners, and make complex concepts more accessible through storytelling and visual cues.

Continued investment in these characters is essential for several strategic reasons:

Enhanced Learner Engagement

Animated characters capture attention and sustain interest, especially among younger audiences. Their presence can transform passive content into interactive experiences, increasing retention and motivation.

Sticky products = customer retention.

Brand Differentiation

Our characters are unique to our platform and contribute to a recognizable and memorable brand identity. Investing in their development strengthens our market position and sets us apart from competitors.

Scalability Across Products

Once developed, AI-generated character assets can be reused and adapted across multiple courses, modules, and platforms, maximizing ROI and ensuring consistency in tone and style.

Support for Diverse Learning Styles

Visual storytelling through animation supports auditory, visual, and kinesthetic learners, making our content more inclusive and effective.

Potential for Expansion

Well-developed characters open doors to future opportunities such as gamification, merchandise, and cross-platform storytelling, creating new revenue streams and brand loyalty.

As our customers' needs shifted toward a state-by-state focus, the amount of art we needed was likely to increase, and some of it would need to be localized. An AI-assisted workflow would help with that.

We created the DE Characters and integrated them into many of our products, where they were welcomed with enthusiasm across many teams.

Characters were still 2D drawings.

A full-color scene with multiple characters cost $1,600 to create.

We were doing “draw-overs” of Felipe Hansen’s original art created in 2020-2021, but were not producing any new poses.

We gained efficiencies by getting our DE Characters into the 3D software Blender and using our first AI mo-cap software.

The team created 3D models of the characters in Blender.

They were rigged with a skeleton and weight painted by Weiwei Zhou in order to prep for animation capabilities.

The first animations with the Immersive Team were created by Dan Birchinall, in collaboration with our Video Team, for the NTK Jr. series, using Weiwei’s model.

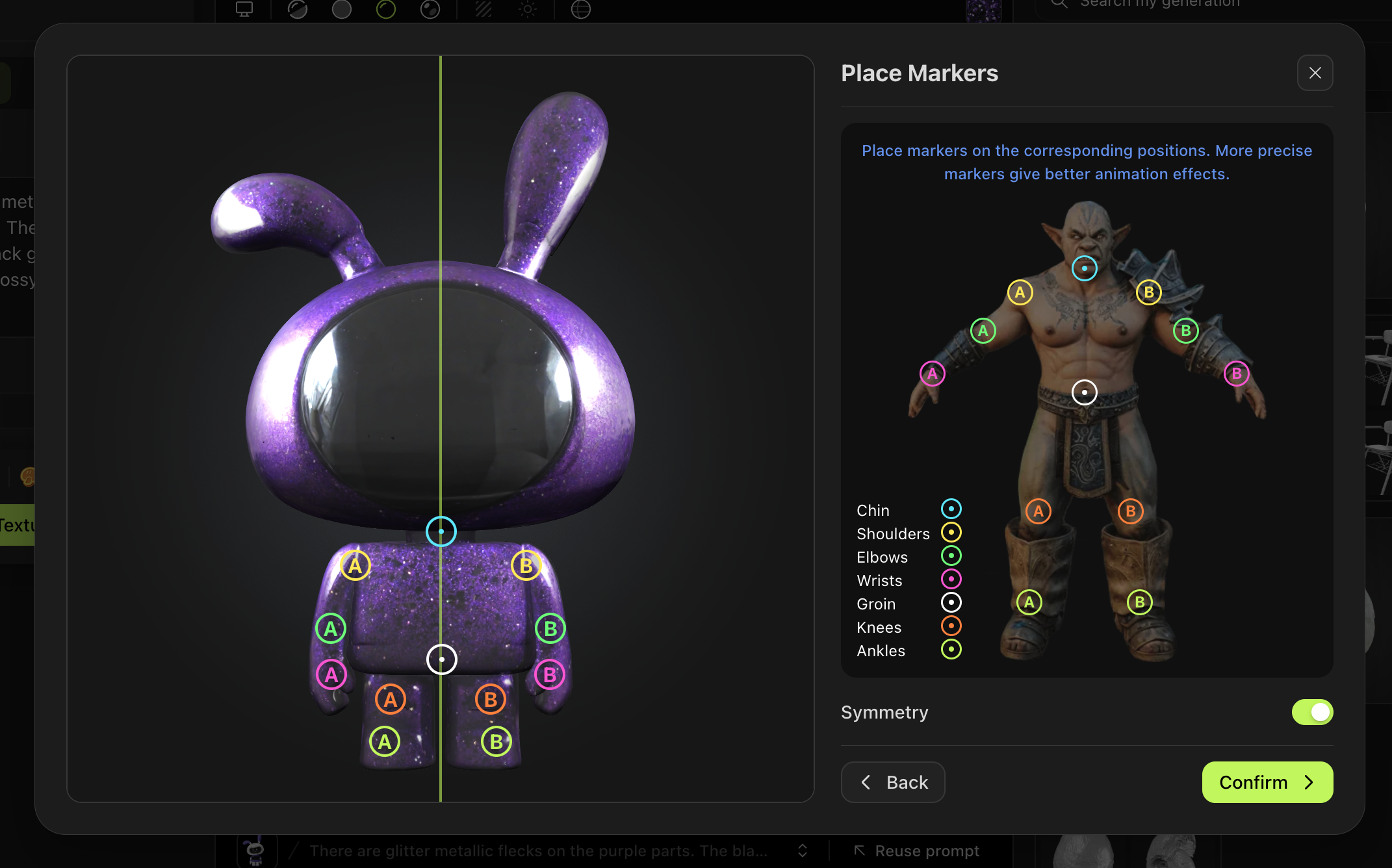

We started exploring using more advanced AI software to speed up the creation and delivery process.

We started using QuickMagic with smartphone motion capture of our own bodies to animate the DE Characters, and we supplied these animations to Product and Marketing teams on request. Animation by the fabulous Weiwei Zhou.

We realized the limitations of QuickMagic.

We attended GDC and researched new programs to gain efficiency. We ran trials of these programs and recorded the pros and cons of each.

The Process

Traditional motion capture is awesome! When we attended GDC, we saw incredible setups that allowed for unbelievable accuracy in capture and translation to the characters. However, the expensive cameras and additional equipment were beyond the budget of an EdTech company.

For several years, we used mobile phone motion capture and various AI programs to capture motion and apply it to the DE Characters. These programs are still quite time-consuming.

Would a program like OpenArt AI help us speed up our process while also reducing cost and improving quality?

Traditional Motion Capture ($$$$$$$$)

AI Motion Capture (using our own bodies)

AI Animation? (text prompt and image)

Traditional MoCap

Because the cost of traditional motion capture was beyond our reach, we used QuickMagic to capture motion with our own smartphones, and the AI software turned that motion into frames for animation.

We did have some success with QuickMagic.

Mocap & Animation by Weiwei Zhou

Meshy is Too Meshy!

Meshy is an image-to-3D and text-to-3D modeling tool we learned about at GDC in March 2025.

The models have over 100k faces, making them too heavy to use in any other 3D program, and the animation capabilities are “not there yet.”

170,000 faces!!

Meshy was super fast, pretty good at understanding prompts and image references, and has a bunch of premade animations…

Rigging was super fun to do in Meshy because the AI features took some of the complicated expertise out of rigging, but as you can see above, the rigged results were not ready for prime time.

Fun and easy rigging with AI assist.

Adobe Firefly & Midjourney

Below are experiments using still drawings fed into Firefly and Midjourney.

Unlike OpenArt AI, these programs are not designed to train a model of a single, unique character. I experimented with both, and you cannot feed them the 20+ images needed to train a model and get consistent character renderings.

It’s super interesting to observe where the programs “fill in” information.

OpenArt AI claims to be able to train a specific character model and then produce video and still art based on text prompts.

You can upload up to 100 images of your character in order for the software to get the full picture and create an accurate model to work from.

We would be able to animate and create character art and backgrounds at a previously unimaginable speed.

We would be able to deliver on the long lists of ideas generated by our partners like Video, Product Marketing, Techbook, Experience and Atlas.

It would resolve our ongoing issue of using human performers, whose proportions are wrong for these characters, to animate the characters that are short and wide.

We may finally be able to animate Eduardo, who uses a wheelchair.

We could also use it to animate Disco, the dog.

I have been using AI art tools for two years, and I think there is a misunderstanding of what they “copy and share” with other users as they take in and regurgitate the art we feed them.

If I ask Midjourney directly to put Hello Kitty in a kimono, I do get Hello Kitty. I could also simply go to the Sanrio website and copy from there, or from any of the many other repositories of copyrighted art featuring Hello Kitty.

If I ask Midjourney for a cute, helpful robot, it’s not going to serve up the DE robot to another user. It may share the glitter surface with a user prompting for a glittering tennis ball, or it may reference the reflection of the black glass tummy screen when prompted by a stranger to create a black glass coffee table, but it would not serve up our characters as a whole in any way. It’s just not how these programs are designed to work.

I’d argue that even if it does give me a perfect Hello Kitty, that will not matter for the future of IP and what makes IP valuable. We are way past the point of keeping images of characters to ourselves. Disney is unable to recall the millions of images generated by Midjourney (they tried), and we would never be able to keep someone from recreating Disco even if we wanted to. Additionally, these tools are getting better every day.

We needed to evolve beyond this way of thinking so we could “do more with less” at Discovery Education, especially given our financial constraints. Companies will need to adapt to the way these programs are designed to work and embrace their rapidly growing capabilities in order to compete in EdTech when it comes to student engagement.

Hello Kitty in a Kimono: March 10, 2024

Hello Kitty in a Kimono: September 2, 2025